I have seen a number of installations of Openfiler on ESX, but I haven’t seen any that fully show the installation I was looking for.

In my case, I have an ESX server with two 1 TB disks. I don’t have a RAID controller in the machine, so the disks just show up in ESX as individual drives.

In order to provide redundancy of any data stored on these local disks, I decided to install Openfiler.

Openfiler is a Linux distribution that allows any disks attached to it (in this case virtual disks, or VMDKs) to be shared out as iSCSI or NFS. I am going to use iSCSI as it performs better in Openfiler (in my opinion). This means that even the ESX server that is hosting the Openfiler will see its own disks through the virtualization layer of Openfiler.

This has the side benefit of making the local disk available to all other ESX servers as shared storage. However, the main reason I wanted to do this was redundancy – I want to make the 2 individual disks look like a single RAID 1 volume and thus have the data stored in 2 different places.

The big difference in my case is that I am going to make 2 boot disks and mirror them. However, one boot disk will be on local storage and the other on existing shared storage. The reason is that if a physical drive in the ESX server were to die, and it happened to be the boot disk for the ESX server, I would have a hard time getting the Openfiler back up. This way, I just stick the other disk into another server (or reinstall ESX on another disk), re-attach the data volumes to the Openfiler and I am back in business!

On to the implementation!

You could potentially use the ESX appliance available from www.openfiler.com, but I decided to use the ISO and create a new installation. As I didn’t have 64-bit hardware, I used the 2.3 x86 version of Openfiler.

I chose Red Hat Enterprise Linux 5 (32-bit) as the OS Type and gave it 512 MB of RAM.

When creating my new Openfiler VM, I gave it 5 GB of disk on separate, existing SHARED storage and 5 GB of disk on one of the local disks. I would not want to create both boot disks (we will end up with 2) on the local storage: if one of the physical disks died and I lost the ESX server, I want to be able to get my data back by just sticking the other physical disk into another server and re-attaching the disk to the Openfiler. Anyway, that is your decision.

I am going to have one boot disk on separate shared storage and one on the local storage.

I begin the installation of Openfiler and skip the Media test.

After the initial steps we get to the partition manager. In our case, the hard disks are called sda and sdb. This can be different in your case. We'll create the /boot partition first:

- Choose Manually partition with Disk Druid.

- Click New.

- Select the following options:

- File System Type: Software RAID

- Allowable Drives: sda

- Size (MB): 102

- Additional Size Options: Fixed Size

Now repeat this (from "Click New") for the other hard disk, choosing sdb under Allowable Drives. After that, make a RAID-1 partition from the two individual partitions:

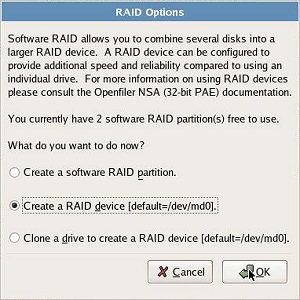

- Click RAID.

- Select Create a RAID device [default=/dev/md0], and click OK.

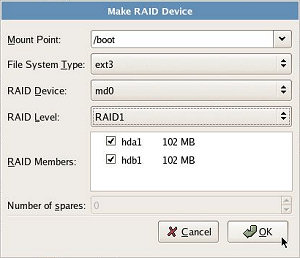

- Select the following options:

- Mount Point: /boot

- File System Type: ext3

- RAID Device: md0

- RAID Level: RAID1

- RAID Members: sda and sdb checked

That's our first RAID-1 partition. Now repeat all of these steps for the / partition. For swap, create a partition on each disk but don't combine them into a RAID-1 device: just choose File System Type: swap when creating each partition and skip the RAID steps (from "Click RAID" onwards).

Now manually assign a static IP, let the install finish and reboot.

Log in as root using the password you assigned during installation.
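Before going further, it is worth checking that both mirrors actually came up with two members. The kernel reports this in /proc/mdstat, where a healthy two-disk mirror shows [UU] and a missing member shows as an underscore (for example [U_]). The function below is only a small sketch for reading that file; the name degraded_arrays is my own and not part of Openfiler.

```shell
#!/bin/sh
# Print the name of every md array whose status shows a missing member.
# Reads /proc/mdstat by default; pass another file to check saved output.
degraded_arrays() {
    awk '
        /^md[0-9]+ :/ { name = $1 }                           # remember the current array
        /\[[U_]*_[U_]*\]/ && name != "" { print name }        # status such as [U_] or [_U]
    ' "${1:-/proc/mdstat}"
}

# Usage on the Openfiler VM (prints nothing when every mirror is complete):
#   degraded_arrays
```

If an array is listed, mdadm --detail on that device (for example /dev/md0) shows which member is missing.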

Now we need to update to the latest version:

conary updateall

conary update kernel

reboot

So that the system can still boot if one of the hard disks fails, we should make both of them bootable. This can be done with GRUB (GRand Unified Bootloader):

$ grub

device (hd0) /dev/sda

root (hd0,0)

setup (hd0)

device (hd1) /dev/sdb

root (hd1,0)

setup (hd1)

quit
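If you would rather not type these into the GRUB shell by hand, the same commands can be generated and piped in. This is only a sketch: grub_setup_commands is a hypothetical helper name, it assumes /boot is the first partition on every disk (as in the layout above), and it assumes the GRUB legacy binary on your Openfiler build accepts the --batch option.

```shell
#!/bin/sh
# Print the GRUB legacy commands that install the boot loader on each disk
# given as an argument, numbering them hd0, hd1, ... in the order supplied.
grub_setup_commands() {
    n=0
    for disk in "$@"; do
        printf 'device (hd%d) %s\n' "$n" "$disk"
        printf 'root (hd%d,0)\n' "$n"
        printf 'setup (hd%d)\n' "$n"
        n=$((n + 1))
    done
    printf 'quit\n'
}

# Review the output first, then (as root) feed it to GRUB:
#   grub_setup_commands /dev/sda /dev/sdb
#   grub_setup_commands /dev/sda /dev/sdb | grub --batch
```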

Now we want to install VMware Tools. From the VM console menu, choose:

Guest->Install VMware Tools

cd /root

mount /dev/cdrom /mnt/cdrom

cp /mnt/cdrom/VMwareTools-4.0.0-208167.tar.gz .

tar -xvzf VMwareTools-4.0.0-208167.tar.gz

cd vmware-tools-distrib

If you run ./vmware-install.pl at this point, you will find that a number of modules are already installed and must be removed before you can proceed. So, remove them first:

rm /lib/modules/2.6.29.6-0.30.smp.gcc3.4.x86.i686/kernel/fs/vmblock/vmblock.ko

rm /lib/modules/2.6.29.6-0.30.smp.gcc3.4.x86.i686/kernel/fs/vmhgfs/vmhgfs.ko

rm /lib/modules/2.6.29.6-0.30.smp.gcc3.4.x86.i686/kernel/drivers/misc/vmci/vmci.ko

rm /lib/modules/2.6.29.6-0.30.smp.gcc3.4.x86.i686/kernel/drivers/misc/vmmemctl/vmmemctl.ko

rm /lib/modules/2.6.29.6-0.30.smp.gcc3.4.x86.i686/kernel/drivers/net/vmxnet/vmxnet.ko
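The kernel version in those paths will differ if conary pulled in a different kernel than mine. A small loop over the same five module files avoids retyping the version string. This is a sketch under that assumption: remove_vmware_modules is a hypothetical name, and the directory you pass in should be the module tree of the kernel you are actually running.

```shell
#!/bin/sh
# Delete the pre-installed VMware kernel modules from one module tree.
# $1 is the module directory, e.g. /lib/modules/<kernel version>.
remove_vmware_modules() {
    moddir="$1"
    for rel in \
        fs/vmblock/vmblock.ko \
        fs/vmhgfs/vmhgfs.ko \
        drivers/misc/vmci/vmci.ko \
        drivers/misc/vmmemctl/vmmemctl.ko \
        drivers/net/vmxnet/vmxnet.ko
    do
        f="$moddir/kernel/$rel"
        if [ -f "$f" ]; then
            rm -f "$f" && echo "removed $f"
        else
            echo "not present: $f"
        fi
    done
}

# Usage, as root, for the running kernel:
#   remove_vmware_modules "/lib/modules/$(uname -r)"
```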

./vmware-install.pl

Accept the defaults for every question except the one about the gcc compiler, where I answered /usr/bin/make:

What is the location of the "gcc" program on your machine? /usr/bin/make

reboot

Add additional disks, RAID them and present them as LUNs to ESX in the normal fashion. If I get time, I will show this part too.